More here.

12/31/19 update:

More here.

In June of this year, the Supreme Court rejected the Trump administration’s plan to add a citizenship question to the 2020 U.S. Census. Observers worried about the chilling effect this question’s inclusion would have on Census participation–particularly among immigrants and Hispanics–so this outcome appeared to resolve concerns about Census counting and its implications. But many observers argued that the damage may already have been done, with the debate itself dissuading people from participating in the Census.

One lingering repercussion could be the spread of misperceptions about the Census. Given how much the citizenship question was in the news over the last year, and how at times its inclusion seemed certain, some Americans may have been left believing that the 2020 Census will indeed ask about citizenship status. Such an incorrect view still matters, as past evidence suggests that views about the Census, including whether it asks about citizenship, could factor into intended participation:

With this concern in mind, I wanted to see whether people believed the 2020 Census would include a citizenship question months after the Supreme Court’s ruling meant it would not. In late October, I asked the following question of 7,966 participants on the Civis Analytics online panel:

“The past year has seen debate over whether the Census should ask people if they are citizens of the U.S. To the best of your knowledge, will the 2020 U.S. Census include a citizenship question?”

Answer options: Yes, No, Not Sure

The data include several demographic and political covariates. To make the sample reflect the national population, I weighted the data to match the characteristics of the national 18+ citizen population on race, age group, gender, and education (thanks to G. Elliott Morris for providing these benchmarks).
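The post does not say which algorithm produced these weights. One common way to match several population margins at once is raking (iterative proportional fitting), and the sketch below assumes that technique. The function name `rake`, the variables, categories, and target shares are all hypothetical placeholders, not the actual benchmarks used here.

```python
def rake(rows, targets, max_iter=100, tol=1e-8):
    """Raking (iterative proportional fitting), a hypothetical sketch.

    Adjusts one weight per respondent until the weighted share of every
    category matches its population target.
    rows:    list of dicts, e.g. {"gender": "F", "educ": "college+"}
    targets: {variable: {category: population share}}; shares sum to 1
    """
    weights = [1.0] * len(rows)
    for _ in range(max_iter):
        largest_adjustment = 0.0
        for var, shares in targets.items():
            # Current weighted total in each category of this variable
            totals = {cat: 0.0 for cat in shares}
            for w, row in zip(weights, rows):
                totals[row[var]] += w
            grand_total = sum(totals.values())
            # One scaling factor per category brings this margin to target
            factors = {cat: shares[cat] * grand_total / totals[cat]
                       for cat in shares if totals[cat] > 0}
            for i, row in enumerate(rows):
                weights[i] *= factors[row[var]]
            largest_adjustment = max(
                largest_adjustment,
                max(abs(f - 1.0) for f in factors.values()))
        # Stop once a full pass over all variables barely changes anything
        if largest_adjustment < tol:
            break
    # Rescale so the weights average to 1
    mean_w = sum(weights) / len(weights)
    return [w / mean_w for w in weights]


# Hypothetical toy sample: 1 of 4 respondents has a college degree,
# but the (made-up) target says 30 percent should.
rows = [
    {"gender": "F", "educ": "college+"},
    {"gender": "F", "educ": "no college"},
    {"gender": "M", "educ": "no college"},
    {"gender": "M", "educ": "no college"},
]
targets = {"gender": {"F": 0.5, "M": 0.5},
           "educ": {"college+": 0.3, "no college": 0.7}}
weights = rake(rows, targets)  # approximately [1.2, 0.8, 1.0, 1.0]
```

Raking only matches each margin separately (not the full joint distribution of race × age × gender × education), which is why it is popular when only marginal benchmarks are available.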

The graph below shows results for the entire sample, with 95% confidence intervals.

Overall, 40.0 percent of American adults think that the 2020 U.S. Census will include a citizenship question. A similar share (35.2 percent) say they are not sure, while only about a quarter answer the question correctly.
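The post does not state how its 95% confidence intervals were computed. As a hedged sketch, one simple approach for weighted data is a normal-approximation interval that replaces the raw sample size with Kish’s effective sample size. The function name and its arguments are hypothetical.

```python
import math


def weighted_share_ci(answers, weights, option, z=1.96):
    """Weighted share choosing `option`, with an approximate 95% CI.

    The normal-approximation interval uses Kish's effective sample size,
    n_eff = (sum of weights)^2 / (sum of squared weights), which shrinks
    as the weights become more unequal and so widens the interval.
    """
    total = sum(weights)
    share = sum(w for a, w in zip(answers, weights) if a == option) / total
    n_eff = total ** 2 / sum(w * w for w in weights)
    se = math.sqrt(share * (1 - share) / n_eff)
    return share, share - z * se, share + z * se
```

For intuition: with 7,966 equally weighted respondents and a 40 percent share, the half-width is about 1.1 percentage points (1.96 × √(0.4 × 0.6 / 7,966) ≈ 0.011). Unequal weights lower the effective sample size and widen that interval.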

Demographic subgroup breakdowns below reveal some interesting wrinkles. The graph below plots the share giving each response option (Yes / No / Not Sure) across the columns, with subgroups running down the rows.

Hispanics are not more likely than whites–and not much more likely than other racial groups–to hold this incorrect belief. Many still do, though, which is notable given that this belief is more likely to affect their Census participation.

The strongest predictor of holding this incorrect belief happens to be Republican partisan identification: 51.3 percent of Republicans say there will be a citizenship question on the 2020 Census, compared with 35.0 percent of Democrats and 26.0 percent of Independents. Given the citizenship question’s association with Trump and his administration, this political cue likely explains the higher rate of belief among the Republican mass public. This fits with patterns for other factual beliefs, and makes views on this issue begin to resemble other misperceptions. In hindsight, I should have asked how confident respondents were in their answers and whether they had heard about this issue before, as this would better distinguish between low factual knowledge and truly held misperceptions. (Small note: In results not shown here, I tested whether “Yes” responses among Republicans increase as education, a proxy for political sophistication, goes up, as this would mirror a pattern from other cases of misperceptions. The expected interactive effect does not emerge, however.)
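The post does not say how that interaction was tested; a regression with a Republican × education term would be typical. As a simpler, hedged illustration of the same idea using weighted crosstabs, the sketch below computes a difference-in-differences of “Yes” shares. The party and education labels, and both function names, are hypothetical.

```python
from collections import defaultdict


def yes_share_by_cell(rows):
    """Weighted share answering "Yes" within each (party, education) cell.

    rows: iterable of (party, education, answer, weight) tuples.
    """
    yes_weight = defaultdict(float)
    cell_weight = defaultdict(float)
    for party, educ, answer, w in rows:
        cell_weight[(party, educ)] += w
        if answer == "Yes":
            yes_weight[(party, educ)] += w
    return {cell: yes_weight[cell] / cell_weight[cell] for cell in cell_weight}


def education_interaction(shares, group, comparison, low, high):
    """Difference-in-differences of "Yes" shares.

    Positive values mean "Yes" rises more with education in `group`
    than in `comparison`; a value near zero means no interactive pattern.
    """
    group_gradient = shares[(group, high)] - shares[(group, low)]
    comparison_gradient = shares[(comparison, high)] - shares[(comparison, low)]
    return group_gradient - comparison_gradient
```

A regression version would add control variables and standard errors, but the crosstab contrast shows what “the expected interactive effect” would look like if it were there.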

To round out the subgroup breakdowns: across age groups, there are few notable differences, apart from younger respondents being more uncertain. Those with the highest education level (college+) answer the question correctly (saying “No”) most often. The greater share of “Not Sure” responses among women fits with past political science research and suggests this item is operating like a political knowledge question.

Takeaways and Caveats

Results here show that a sizable share of the American public, two in five adults, believes that the 2020 U.S. Census will include a citizenship question, months after the Supreme Court ruled the Trump administration could not add it to the Census. This is important in light of past evidence showing that this question’s inclusion dampens reported intent to take the Census among Hispanics. At the same time, this belief has a partisan dimension: over half of Republicans believe that the next Census will include a citizenship question.

A few caveats are worth keeping in mind. First, although I applied weights, this is still not a purely nationally representative sample, so I would caution against focusing too much on the precise population rates. Second, acquiescence bias and uncertainty about the Census could distort results: people might be prone to offer an affirmative response when pushed to give an answer. Given the nature of the Census, citizenship seems like another personal question that would make sense appearing there, so its inclusion might sound like the right answer, and the correct answer would be obscure to many regardless of how heavily the question was debated in the last year. This means that the rate of belief I find is not solely attributable to the Trump administration’s recent effort to add the question to the Census.

In the study of misperceptions, researchers sometimes include a fabricated survey item to see whether respondents will express belief in anything, and compare that with belief rates for legitimate items (see here and here for examples). In hindsight, and motivated by these strategies, I should have also asked respondents whether they thought other items would be on the Census: a bogus item as well as a more believable one, such as religion.

Over at The Upshot (New York Times), I wrote about a recent survey experiment I ran. I randomly exposed people either to a short summary of the recent Democratic debates (and the various left-leaning policies proposed in them) or to unrelated political content, and measured various reactions regarding the 2020 election. Most notably, I find evidence of backlash among Independents in their intended vote choice: after reading about the sharp left turn taken by the current Democratic candidates, they moved against the eventual Democratic nominee. In this post, I record details on the survey experimental design and methods.

Original piece: “What Democrats Could Lose With Their Left Turn” (New York Times/The Upshot)

Ahead of the 2020 election, there’s been debate over whether the Democratic Party should try to win over voters who switched to Donald Trump in 2016 after not having voted Republican in 2012. Where these Trump switchers stand politically might matter in this calculus, as some analyses argue.

I turned to the Voter Study Group panel (using the data released in 2018) to get a sense of these switchers in comparison to all Clinton and Trump voters. Because the survey interviews the same individuals repeatedly, I was able to compare three key groups (Clinton voters, Trump switchers, and Trump voters) 1) on various political beliefs and 2) before, right after, and in the years following the 2016 election. Thanks to its panel nature (thousands of individuals interviewed in 2011, 2016, 2017, and 2018), the data can offer unique insights, like opinion measures for Trump switchers well ahead of time (five years before the 2016 election). The graph below shows the results. Overall, it’s a mixed bag, but these Trump switchers more closely resemble typical Trump voters than Clinton voters.
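One hedged way to make “resemble typical Trump voters” concrete for any single survey item is to place the switchers’ average on a scale running from the average Clinton voter (0) to the average Trump voter (1). This metric and the function name are illustrative assumptions, not the measure used in the graph.

```python
def relative_position(clinton_mean, switcher_mean, trump_mean):
    """Place Trump switchers on a 0-1 scale for one survey item.

    0 = average Clinton voter, 1 = average Trump voter.
    Values above 0.5 mean switchers sit closer to Trump voters.
    """
    gap = trump_mean - clinton_mean
    if gap == 0:
        raise ValueError("Clinton and Trump voters do not differ on this item")
    return (switcher_mean - clinton_mean) / gap
```

Computing this item by item (and wave by wave) would show where the “mixed bag” comes from: some items near 0.5, others well above it.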

Here’s a quick roundup of the most interesting results:

Political observers often expect major political events to have ramifications for public opinion. The recent release and aftermath of the Mueller Report introduced yet another one of these scenarios. Damaging findings against Donald Trump appeared destined to harm the president’s image. To many, this expectation materialized: in at least a few polls since the report’s release, Trump’s approval declined, with a result from a Morning Consult/Politico poll attracting particular attention:

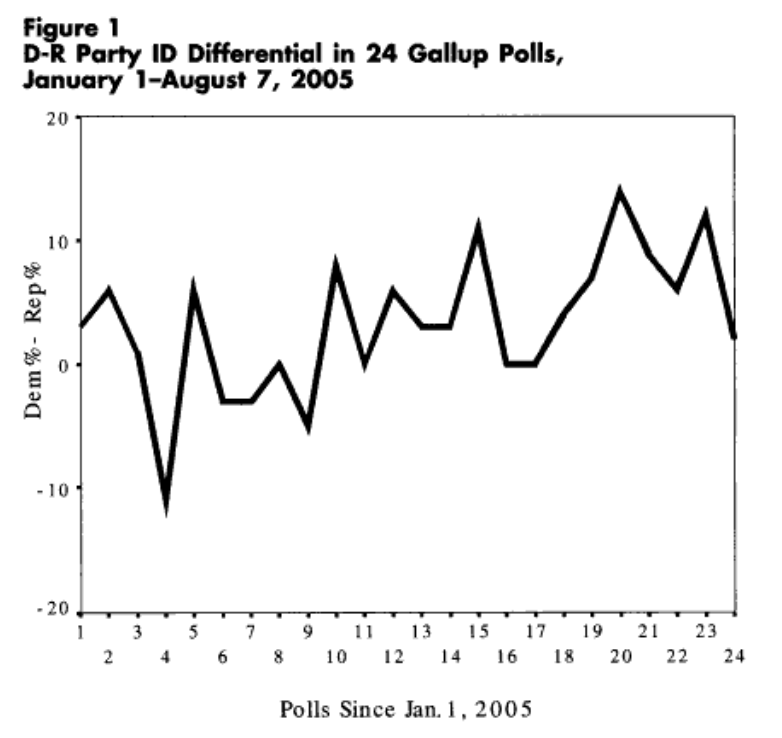

However, polling movement such as this may not always be what it seems. Fluctuations in the partisan composition of a poll’s sample can often create a mirage of public opinion change. This can happen for a few reasons. For one, random variation in partisan composition from one sample to the next could meaningfully shape outcomes like vote choice and presidential approval. Here’s an example of such drastic variation from Abramowitz (2006):

Second, beyond general sampling variability, differential partisan nonresponse bias could be at play. As many have documented (and as I’ve discussed before), partisans’ willingness to take part in political surveys can vary over time. A collection of evidence points to a role for the news and political environment in shaping this willingness and how it varies by party. It’s worth briefly discussing each of these past bits of evidence.
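To put a rough number on the ordinary sampling variability mentioned above, a sketch under simple random sampling (an assumption; real polls have design effects that enlarge this) gives the standard error of a sample’s net partisan composition. The poll size and party shares in the note below are hypothetical.

```python
import math


def net_partisan_se(p_dem, p_rep, n):
    """Standard error of a sample's net partisan composition,
    (Democratic share - Republican share), under simple random sampling.

    Because both shares come from the same multinomial sample,
    Var(d - r) = [p_dem + p_rep - (p_dem - p_rep)**2] / n.
    """
    return math.sqrt((p_dem + p_rep - (p_dem - p_rep) ** 2) / n)
```

For a hypothetical poll of 1,000 that is 33 percent Democratic and 30 percent Republican, this gives a standard error of about 0.025, so sampling alone could move the net composition by roughly ±5 points (95%). The difference between two independent such polls has a standard error about √2 times larger, or roughly ±7 points.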

News Environments and Partisan Nonresponse

For example, during the 2016 election, Republicans became less likely to participate in polls when things were going badly for their candidate, Trump (i.e., negative news and controversy, such as the period after the Access Hollywood video release). Gelman et al. (2016) show something very similar for the 2012 election in the time periods surrounding candidate debates (e.g., Democrats becoming less likely to take surveys following a supposedly poor first debate performance by Barack Obama), and describe opinion swings as “artifacts of partisan nonresponse.” Newer (preliminary) research from Mark Blumenthal using SurveyMonkey data appears to show evidence of differential partisan nonresponse in 2018 midterm polling (taken from his abstract):

“SurveyMonkey’s tracking surveys in the Fall of 2018 show a similar pattern. For roughly three weeks following the Kavanaugh/Blasey-Ford hearings in late September, respondents who approved of President Trump – the second question asked on every survey – were more likely to complete surveys than respondents who disapproved. These same surveys showed increases in Republican party identification, Trump approval and a narrowing of the Democratic lead on the generic U.S. House ballot, apparent trends that all regressed to near their prior means in mid-October when the differential response patterns faded.”

In all these cases, differential partisan nonresponse had large implications for horserace results, overstating swings in vote intention and changes in candidate fortunes. Evidence like this is nicely complemented by work in progress from Jin Woo Kim and Eunji Kim. They argue that people pay more attention to, and express more interest in, politics when their partisan side is doing well, and less so when their side is doing poorly. For example, in ANES survey data since 1952, Americans who share the partisanship of a well-performing president become more politically interested than out-partisans. As another case study, Kim and Kim use the 2008 Lehman Brothers bankruptcy filing, a source of negative news for Republicans as the party in power, as a natural experiment, and show that Republicans paid less attention to politics after this event.

In sum, several pieces of evidence point to differential partisan nonresponse bias as a key shaper of prominent survey outcomes like vote choice. At the simplest level, the partisan composition of a poll’s sample matters a lot for politicized outcomes that are heavily correlated with partisanship. But most pollsters shy away from addressing these issues, perhaps most importantly because there’s no straightforward weight for “partisan composition.” Partisanship is very stable at the individual and aggregate levels, but it can still fluctuate, and no clear benchmark for it exists. Consequently, most pollsters seem to frown upon this weighting option. Weighting to past vote choice, which has a known distribution (the result of the most recent election), is another option. But because most pollsters don’t use longitudinal panels, they’d have to rely on recalled vote choice, which is often viewed skeptically: people may forget their past vote or recall it in a biased manner (though I’d argue this common belief is flawed, as the best available data suggest recall is very accurate).
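The weighting-to-past-vote option described above can be sketched as a one-variable post-stratification step applied on top of existing weights. This is a hedged illustration: the vote categories and target shares in the usage note are hypothetical, and a real implementation would usually fold recalled vote into a larger raking scheme rather than apply it alone.

```python
from collections import defaultdict


def reweight_to_recalled_vote(recalled_vote, base_weights, target_shares):
    """Post-stratify existing weights on recalled past vote.

    Each respondent's weight is multiplied by (target share / current
    weighted share) for the vote category they report, so the weighted
    recalled-vote distribution matches the known election result.
    """
    category_totals = defaultdict(float)
    for vote, w in zip(recalled_vote, base_weights):
        category_totals[vote] += w
    grand_total = sum(category_totals.values())
    return [
        w * target_shares[vote] * grand_total / category_totals[vote]
        for vote, w in zip(recalled_vote, base_weights)
    ]
```

For example, with recalled votes `["Clinton", "Clinton", "Trump", "Did not vote"]`, equal base weights, and made-up targets of 0.3 / 0.3 / 0.4, the function returns weights of about 0.6, 0.6, 1.2, and 1.6. Applied alone, this step can pull demographic margins away from their targets, which is one reason to combine it with raking.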

Sample Composition Effects in Trump Approval Polls

Given these issues with possible corrections, most pollsters proceed without adjusting their samples along any partisan dimension. In data I’ve analyzed over the last few years, this decision has implications for polling results that can be readily observed even without microdata. The tendency seems to extend beyond vote intention polls (the focus of much of the aforementioned research) to approval ratings for Trump. Specifically, I’ve been using crosstab figures to see how a poll’s partisan composition (the relative distribution of Democrats and Republicans in a sample) relates to Trump’s approval level (e.g., approve% minus disapprove%), and whether this relationship also varies by polling methodology. The methodological distinction I draw is whether a pollster includes a weighting correction for partisanship, past vote choice, or something along those lines. For the first half of 2017, I came up with the following graph:

On the left, partisan composition and Trump approval had no relationship among polls that corrected for their sample’s partisan composition in some way—in other words, polls that took a step to address partisan nonresponse bias. The right panel, however, shows a fairly strong relationship between the two variables among pollsters that didn’t correct for partisan composition. In essence, their Trump approval numbers came in part to reflect the balance of Democrats and Republicans in their samples, rather than only the assessment of Trump, as intended. The fewer Republicans that happened to take a survey (perhaps because of negative news surrounding Trump early in his term), the worse Trump’s numbers looked. Such opinion movement, typically interpreted as minds changing about Trump, would thus turn out to be illusory. (In other posts with the same overarching topic in mind, I took an alternative look at Trump approval numbers and also looked at Obama’s approval numbers, and came to similar conclusions.)

Implications for Post-Mueller Report Approval Polls

Circling back to the original subject of this post, it’s worth considering what all of this past literature and evidence means for polling after an event like the Mueller Report release. By generating an environment with plenty of negative news for Trump, this situation seems primed for a partisan nonresponse dynamic. Namely, mass Republicans might start paying less attention to political news in light of the negativity swirling around their co-partisan president. Given that taking a poll is itself a political act and a form of political expression, this period could easily make Republicans disinclined to participate in polls (whereas Democrats, seeing a more congenial news environment that damages an out-party president, may be more likely to take part). In turn, as past examples have taught us, Trump’s approval might appear to decline during this period when the drop is in fact an artifact of sampling and partisan nonresponse.

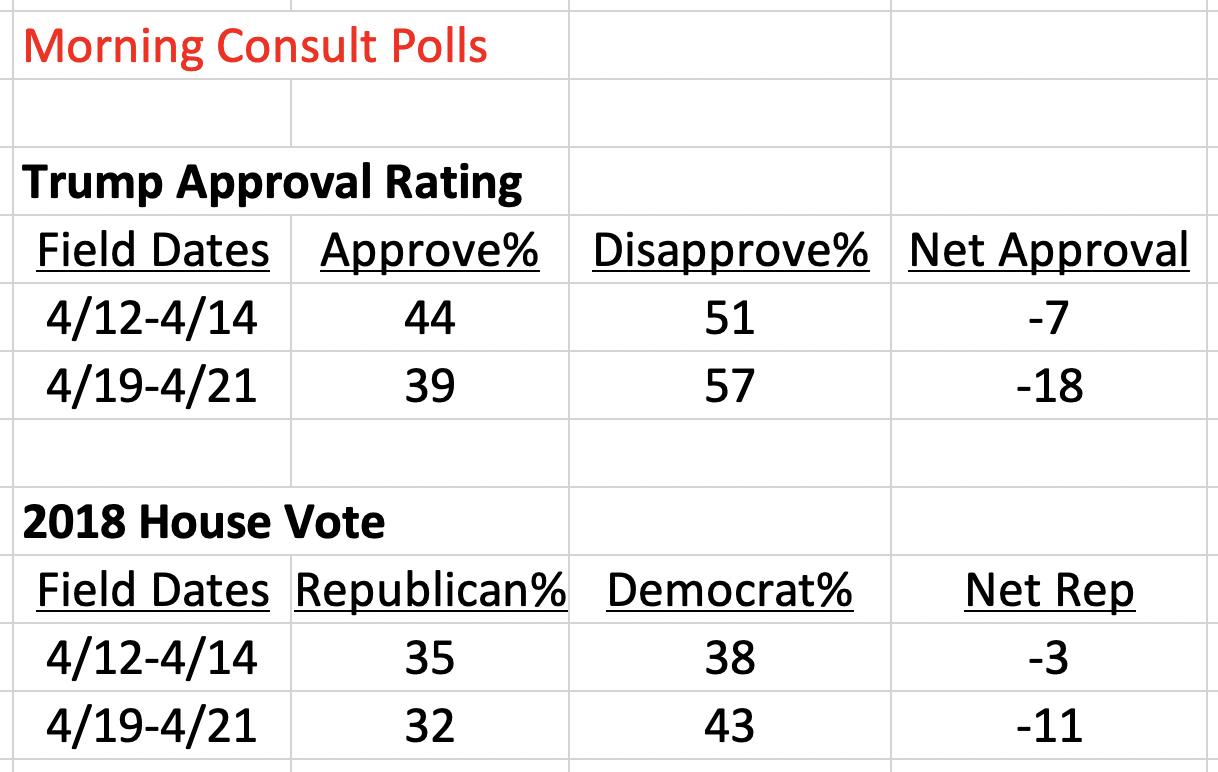

The recent result from the Morning Consult poll prompted me to look into the data. The poll showed the worst mark for Trump in all of its weekly tracking history, and the -18 net approval was a sharp drop from a previous -7 net approval. Could changes in the partisan composition of the poll, indicative of partisan nonresponse patterns, account for this drop? To get a sense of this, below I look at the polls before and after the Mueller Report release and track Trump’s approval and 2018 House vote choice numbers in each. The House vote distribution here aims to capture the underlying partisan distribution of the sample (similar trends result using partisanship instead of vote choice):

From the period before the Mueller Report release (4/12-4/14 poll) to after it (4/19-4/21 poll), the partisan composition of Morning Consult’s polling sample becomes noticeably more Democratic. After being net -3 points Republican before the report (three percentage points more Democratic than Republican), it becomes net -11 points afterwards. At the same time, as noted before, Trump’s net approval worsens by 11 points across these polls. Although this is a crude comparison and other factors could be at play, the relationship is pretty clear: as the partisan composition of the poll changes, so too does a politicized outcome like Trump approval. The sample becomes eight net points less Republican, and Trump’s approval declines by a net 11 points. Unless people suddenly became much more likely to misremember their 2018 House vote (which available data suggest is generally unlikely), the effect likely flows from partisan composition to Trump approval ratings. Differential partisan nonresponse bias, perhaps spurred by a negative Trump news cycle that dissuaded Republicans from participating in polls, seems to play a role here. Although this data can’t precisely isolate that specific mechanism, at the very least, fluctuations in the partisanship of Morning Consult’s samples are strongly influencing its Trump approval results, overstating real opinion change in the process.
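The arithmetic in the paragraph above can be spelled out, along with a hedged back-of-envelope step. That step assumes the 0.86 slope reported for Morning Consult later in this post (estimated across its polls through mid-2018) also applies to this single week-to-week change. That is my assumption for illustration, not an estimate from the data.

```python
# Morning Consult figures quoted above (net composition = Republican%
# minus Democratic%; net approval = approve% minus disapprove%)
composition = {"before": -3, "after": -11}
approval = {"before": -7, "after": -18}

composition_shift = composition["after"] - composition["before"]  # -8 points
approval_shift = approval["after"] - approval["before"]           # -11 points

# Hypothetical back-of-envelope: ASSUME the 0.86 cross-poll slope for
# Morning Consult also describes this one-week change
slope = 0.86
predicted_from_composition = slope * composition_shift  # about -6.9 points
share_of_drop = predicted_from_composition / approval_shift  # about 0.63
```

Under that assumption, roughly 7 of the 11-point drop would trace to the change in sample composition alone. This is an illustration of the logic, not a result from the post.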

Not surprisingly, Morning Consult does not include any explicit correction for the partisan composition of its samples, at least according to its public methodology statement:

Decisions like this, as my earlier analysis suggests, make polling results more susceptible to partisan nonresponse bias. In a separate analysis, I ran the same comparison (partisan composition vs. Trump approval) split by individual pollster, since by mid-2018 some pollsters had released enough polls to support it. The plot immediately below shows this comparison across different pollsters:

Positive relationships emerge in most cases. One notable exception is YouGov, which weighted to 2016 vote choice in its polling. Morning Consult, on the other hand, shows the strongest relationship: its Trump approval numbers are most strongly affected by the partisan composition it happens to get in its samples. To put a numeric estimate on this, below I show OLS coefficients from regressing net Trump approval on net Republican% across each pollster’s polls:

Results here confirm Morning Consult as the pollster most susceptible to partisan composition effects: a one-point net Republican increase corresponds to a 0.86-point net Trump approval increase, a nearly 1-to-1 relationship.
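The per-pollster regressions described above can be sketched in a few lines. This assumes one row per poll with hypothetical field names; it estimates only the slope (no standard errors), which is the quantity discussed here.

```python
from collections import defaultdict


def ols_slope(x, y):
    """Slope from an OLS regression of y on x (with an intercept)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("x does not vary, so the slope is undefined")
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    return sxy / sxx


def slopes_by_pollster(polls):
    """polls: iterable of (pollster, net_republican, net_approval) tuples,
    one per poll. Returns {pollster: slope of net approval on net Rep%}."""
    grouped = defaultdict(lambda: ([], []))
    for pollster, net_rep, net_approval in polls:
        xs, ys = grouped[pollster]
        xs.append(net_rep)
        ys.append(net_approval)
    return {name: ols_slope(xs, ys) for name, (xs, ys) in grouped.items()}
```

A slope near 1 (like Morning Consult’s 0.86) means each point of net Republican composition translates almost fully into net approval; a slope near 0 means approval does not track sample composition.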

Conclusion

As I’ve reiterated throughout this post, working with crosstab data and attempting to derive meaning without the best possible resources (e.g., microdata) has significant limits. But the evidence presented here is still consistent with a growing body of research on differential partisan nonresponse bias. Partisans’ willingness to take part in polls varies, often depending on how congenial the political news environment is to their own politics when a survey is in the field, and this has big implications for important polling outcomes like vote intent and presidential approval. When confronted with large swings in pre-election polls or approval numbers, observers should always first consider the partisan balance of those polls’ samples and how it has changed since the previous sample. Otherwise, real opinion change could be confused with sampling artifacts. This also fits nicely with many political science lessons on the stability of central political attitudes in the current age (i.e., partisanship, vote intent, and presidential evaluations): the “bar” for viewing opinion change in these variables as meaningful should be set high. A passage from Gelman et al. (2016) offers a good closing point:

“The temptation to over-interpret bumps in election polls can be difficult to resist, so our findings provide a cautionary tale… Correcting for these [nonresponse] biases gives us a picture of public opinion and voting that corresponds better with our understanding of the intense partisan polarization in modern American politics.”

Addendum 6/1/19:

See here for more data and analysis related to issues described in the above post.

With the recent release of 2018 CCES data, I extended the time series on racial resentment battery responses by party (filling in 2016, which didn’t include the standard RR items, with combined university team modules). White Democrats have continued a huge movement in the liberal direction on questions of race into 2018 (attitudes towards blacks here). In the CCES, it’s been an even larger shift over the last two years than in prior years.

Some context on this change (liberalizing racial attitudes especially among Democrats):

What’s driving this liberalizing racial attitude change? Some potential factors:

3/27/19 update: Check out a piece by Thomas Edsall in The New York Times opinion pages that includes the first graph above, and some discussion on it and other liberalizing social attitudes/trends in the U.S.

Background

In their article “Does Party Trump Ideology? Disentangling Party and Ideology in America” recently published in the journal American Political Science Review, Michael Barber and Jeremy Pope present a very compelling, important, and timely study. Investigating the extent of party loyalty and the “follow-the-leader” dynamic among the American public, the authors test how partisans react to flexible policy position-taking by President Donald Trump—and one similar case study for Barack Obama—with a survey experiment. The main finding is striking: on average, when Trump takes a conservative position on a policy issue Republicans express more conservative beliefs on that policy themselves, and when Trump takes a liberal stance Republicans too become significantly more liberal as a result. The latter exemplifies blind leader adherence best—even when Trump takes positions outside of his party’s mainstream ideology, mass members of his party still become more likely to adopt his stance.

What about Democrats?

A common reaction to this finding has been questions about partisan (a)symmetries. The study focused mainly on Republican members of the public and their current leader, Trump. Should we expect the same dynamic among Democrats, blindly following a comparable leader in their party? To address this, Barber and Pope discuss a robustness analysis toward the end of their paper (in the subsection “Robustness: Other Political Leaders as Tests”) that tests for leader effects among Democrats using Barack Obama as the cue-giver. Specifically, they exploit the close similarity between a new immigration asylum policy from Trump (introduced in the spring of 2018) and the policy stance of the Obama administration a presidency earlier (both policies said families/children, when arrested by the border patrol, will be held in a detention facility before an asylum hearing). The leader cues in support of the policy can thus be credibly interchanged (i.e., experimentally manipulated). In the experiment, partisans were randomly told either 1) that this is Trump’s policy, 2) that this was Obama’s policy, or 3) nothing about its source (no cue), after which they expressed how strongly they agreed (a value of 5 on a 1-5 scale) or disagreed (1) with the policy.

Barber and Pope describe their results and the implications of them in the following way:

“The results show large effects for Democrats and smaller, but still statistically significant effects for Republicans…

…Democrats are also willing to adjust their preferences when told that the policy was coming from Obama…”

Separating out Ingroup and Outgroup Cues

Though not explicitly stated, the purpose of this robustness study is to test whether evidence of strong in-group partisan loyalty and influence from leaders within the same party also appears for Democrats. Because partisans are exposed to both Obama and Trump cues, results from this experimental design can speak not only to ingroup dynamics but to outgroup dynamics as well. The analysis approach used in the article, however, cannot distinguish between these two possible forces. This is because outcomes from the experimental control condition (no exposure to a leader cue) are omitted from the analysis. Specifically, to calculate the effects (appearing in Figure 6 in the article), the treatment variable uses just the Trump cue and Obama cue conditions. The displayed treatment effects are simply the difference in policy opinion between these two conditions.

Original analysis

Below is a graph reproducing the results in the original article (with replication data) using the original analysis approach: regressing the policy opinion variable on a binary variable that—for Republicans—takes on a value of 1 if the cue comes from Trump and a value of 0 if it comes from Obama (and the opposite for Democrats). Thick bars represent 90% confidence intervals and thin ones are for 95% confidence intervals. (Note: The original article shows 0.22 instead of 0.23. This is due to differences in rounding up/down.)

Democrats’ agreement with the policy was 1.18 points lower when told it was Trump’s than when told it was Obama’s. Republicans’ agreement was 0.23 points higher when it came from Trump (vs. coming from Obama). It is not clear, though, whether these effects are driven more by partisans following ingroup leaders on policy (Democrats following Obama) or by partisans being repelled by outgroup leaders (Democrats moving away from Trump’s stance). Fortunately, this can be separated out. Instead of comparing average opinion levels between the Trump and Obama cue conditions, it would be more informative to compare the average in the Trump cue condition to that in the control condition, and the average in the Obama cue condition to that in the control (again, split by respondent partisanship).
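The contrast between the two approaches can be sketched directly from condition means. With a single categorical treatment and no covariates, OLS coefficients on treatment dummies equal these differences in means, so the sketch mirrors both regressions. The condition labels and the function name are hypothetical; scores are 1-5 agreement values for one partisan group.

```python
from statistics import mean


def cue_contrasts(responses):
    """responses: dict mapping condition ("control", "obama", "trump")
    to a list of 1-5 agreement scores for one partisan group.

    Returns (trump_vs_obama, obama_vs_control, trump_vs_control):
    the original-style contrast, then each cue relative to the control.
    """
    m = {cond: mean(scores) for cond, scores in responses.items()}
    trump_vs_obama = m["trump"] - m["obama"]
    obama_vs_control = m["obama"] - m["control"]
    trump_vs_control = m["trump"] - m["control"]
    return trump_vs_obama, obama_vs_control, trump_vs_control
```

By construction, the original contrast always equals the Trump-vs-control effect minus the Obama-vs-control effect. That identity is why the Democrats’ 1.18-point gap can be split into the two control-referenced effects reported in the reanalysis below (0.76 + 0.42 = 1.18), and why the split, not the total, reveals whether ingroup or outgroup cues drive it.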

Reanalysis

The below plot presents results from setting the control condition as the reference group in distinct “Trump cue” and “Obama cue” treatment variables, which predict policy opinion among Democrats (left-hand side) and Republicans (right). The Obama cue estimate and confidence interval appear in purple while those for the Trump cue appear in orange.

After breaking up the cue effects like this, the result for Republicans is no longer significant at conventional levels. The Obama cue treatment does not move their opinion much, while the Trump cue moves them 0.18 points more supportive, but the effect is not significant.

Of course, the key part of this study is opinion movement among Democrats. By making use of the control condition, this reanalysis reveals that the outgroup leader effect from Trump on mass Democratic opinion is nearly twice as large as the ingroup leader effect from Obama, though both dynamics are at play. When told the immigration asylum policy was Obama’s policy during his presidency, Democrats become 0.42 points more supportive relative to the control. This effect is statistically significant, and provides evidence of what this robustness study was seeking: mass Democrats following their own leader on policy. When told the policy is Trump’s, Democrats react more strongly, becoming 0.76 points more opposed to the policy compared to the control (also statistically significant). To sum up, the ingroup follow-the-leader effect certainly arises for Democrats in this study. But the reported treatment effect was driven in larger part by a reaction to an outgroup leader’s expressed stance, a dynamic different from the one at the heart of the original article.

Discussion

Beyond clarifying this part of Barber and Pope’s paper, the specific result should not come as that much of a surprise in the context of related literature. In his 2012 article “Polarizing Cues” in the American Journal of Political Science, Stephen Nicholson uses a survey experiment to find that when party leaders take a position on housing and immigration policies, mass partisans from the out-party move significantly away from this leader’s position. (For example, Republicans oppose an immigration bill substantially more when they hear Obama supports it versus when they don’t hear his position.) Thus, this particularly strong reaction to an outgroup leader cue in Barber and Pope’s robustness study—which likely incited negative partisanship—makes sense.

[As an interesting aside, Nicholson curiously does not find strong evidence for ingroup leader persuasion; partisans don’t follow-the-leader much. This contrasts with Barber and Pope’s main results: as Figure 1 in their paper indicates, Republicans follow their ingroup leader in Trump a considerable amount, but Democrats do not react that negatively to an outgroup leader (Trump) in expressing their policy opinion. Future research should address this uncertainty, paying special attention to 1) cue type (actual leader names? anonymous partisan Congress members? party labels?), 2) study timing (during a campaign? right after one when a president’s policy orientation is not as clear?), and 3) policy areas (will attached source cues be viewed credibly by respondents? do the issues vary by salience level?).]

Do Only Republicans Follow-the-Leader? No

Where does this leave us? To reiterate, the purpose of this robustness study was to check whether the follow-the-leader dynamic was not specific to Republicans (as the main study results may imply) but rather common to all partisans. The experiment does indeed support the idea that Democrats also sometimes follow-the-leader on policy opinion—just not as much as the original results may have indicated.

Moreover, other pieces of evidence support a view of partisan symmetry for this behavior. In the first part of a working paper of mine that builds on Barber and Pope’s article, I evaluate how partisans form their opinion in response to policy positions taken by leaders outside the party mainstream (a liberal position by Trump for Republicans, a conservative one by Obama for Democrats). In both cases, partisans follow their respective leaders. For example, when told Obama has expressed support of a major free trade bill that was previously proposed by Republican legislators, Democrats move 1.11 points more supportive of the bill (on a 1-7 scale) compared to no exposure to an Obama cue.

Second, panel survey evidence by Gabe Lenz in his 2012 book “Follow the Leader? How Voters Respond to Politicians’ Policies and Performance” is also telling. One of the case studies Lenz uses is George W. Bush’s policy proposal to invest Social Security funds in the stock market during the 2000 election (his opponent, Al Gore, opposed it), and how this became the most prominent policy debate during the campaign. From August to late October of 2000—during which the issue became particularly salient—Lenz finds that Gore supporters change their policy opinion most to bring it in line with their leader’s (Gore’s stance of opposition). Given that Gore supporters are more likely to be Democrats, this serves as another example of Democrats following their leader on policy. These pieces of evidence—along with Barber and Pope’s robustness study—thus show the follow-the-leader dynamic cuts across partisan lines.

I recently wrote a post for the Data for Progress blog about historical and recent patterns in the gender gap–with special attention to whether or not women have become more Democratic as of late–across three areas: partisanship, general election vote choice, and midterm election vote choice. You can check out the post here and some of the graphs used in the piece below.